Static website vs dynamic website: a sysadmin’s perspective

Every website can be categorized as either static or dynamic. In this post, we’re going to define a dynamic website as one that needs server-side processing to function, and a static website as one that serves the same content to all users. Another way of looking at it is that the content of a static website is pre-built rather than generated on the server for each request.

Pros and cons

Each type of website serves a specific purpose, and it would be wrong to say that either one is better than the other.

Dynamic websites are what made the modern web possible. Webshops, forums, social media sites, web apps, etc. are all examples of dynamic websites.

Any website that provides a more complex functionality to the end-user will most likely be dynamic.

Nevertheless, not all websites need to be dynamic. A rule of thumb is that if your website’s purpose is to present content you create for your users, as with a news portal, a blog or your company site, it can be purely static.

Let’s take a look at some benefits and disadvantages of static websites.

Static website benefits

One of the benefits of a static website is that it reduces server load.

Let’s take a look at a typical request cycle that happens with a dynamic site. In this example, we’re going to consider a classic LAMP stack with a proxy and a caching service. The request coming to the server is received by the proxy. The proxy then routes the request to Apache.

Apache has to invoke the PHP interpreter. Once the interpreter gets the request, it usually needs to fetch data from MySQL or a caching service (Redis, Memcached, etc.) and process it before returning the response to Apache. Apache then forwards the response to the proxy, which finally delivers it to the end-user.

When the number of requests is high, the server can quickly run out of the CPU and memory required to process them, which results in slower request resolution and, in the worst-case scenario, service downtime. To prevent such issues, we need more resources. This can be done by simply moving the database to a different server, or by using more complex infrastructures involving multiple web and database servers, as well as load balancers. The complexity of the infrastructure depends on the type of website and traffic volume.

However, for a static website, a request doesn’t need to be processed by a backend application, and no data needs to be fetched from other services. The majority of your server resources are available to serve readily available content to the end-user, meaning a faster resolution of each request. You can also serve your whole site via a CDN (content delivery network), which means you don’t need to worry about not being able to handle a large amount of traffic. Having a static website can reduce the overall complexity and cost of your infrastructure.

Another benefit of a static website is that it improves the security of your website. With no backend application or database exposed to visitors, you largely don’t need to worry about SQL injection, server-side cross-site scripting flaws or any other nefarious activity that targets backend applications.

Even when using a popular CMS like WordPress, you can still be vulnerable. Over the years, almost 300 vulnerabilities have been discovered in WordPress. Serving your content directly from WordPress requires a lot of care. You must regularly update WordPress itself, as well as the themes and plugins you use. Even though keeping everything up to date reduces the security risk, you can still be vulnerable to zero-day exploits.

Backend languages can also be vulnerable. PHP itself has had more than 600 vulnerabilities reported over the years. Regular updates of the language and its modules are required. Another thing that we have to consider is security bugs in the application itself.

Static website disadvantages

Other than the use case limitation of static websites, one of the disadvantages of static websites is that they are harder to write.

Building a large static website without using a CMS (content management system) can be a complex and daunting task.

Converting a dynamically generated website to static

You can take advantage of the ease of use of a CMS and the benefits of having a static website. There are many available open-source static site generators you can use to generate your static site.

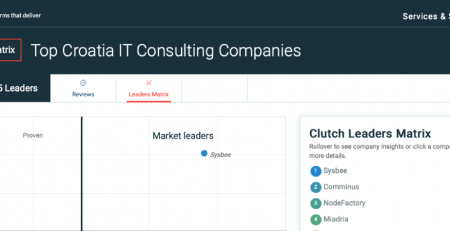

You can also use a dynamic CMS to create the content and then convert it to static resources. That’s how we at Sysbee created our website.

We use WordPress hosted on a server accessible only via a VPN to create the content, an AWS S3 bucket to host the static resources and AWS CloudFront to distribute the content to the end-user.

We decided to go with WordPress mostly because of its maturity, plugin-rich ecosystem and ease of use for our editors. Since access to the server is restricted to our sysadmins and website editors, we can take a more relaxed approach to security and not worry that a plugin or theme update will break the functionality of the website. If you manage your site alone, you could install WordPress on your own machine and still use the techniques we’re going to show to create a static website out of it.

Using an S3 bucket with website hosting enabled reduces the need for, and the cost of, managing a separate server to host our static resources. It’s a highly available and robust hosting solution. It also integrates easily with the AWS CloudFront CDN and opens up the possibility of having some dynamic features by using AWS Lambda.

So how does one go from creating a site in WordPress to hosting it as a static website?

The simplest way is to use a WordPress plugin such as WP2Static. WP2Static is a great tool that allows users to automatically convert the content of their website to static resources and push it to their desired hosting solution. However, the plugin lacked some features we required, such as pushing the content to different buckets without configuration changes, doing a partial website push and having a simple way to revert to previous versions of the website.

Being able to switch the push destination easily gives you a staging area where you can check whether you missed something during the push and whether the website works as intended before going live. Doing a partial push is great if you need to push only part of the content. An example of when that would be useful is publishing a new blog post while making changes to different parts of the website that are not ready to go live.

Since the readily available tools lacked the features we needed, we decided to develop our own dynamic-to-static converter.

How we did it

The workflow required to convert the content generated by WordPress to static and deploy it to S3 can be summarized with the following steps:

- crawl the website

- process the downloaded resources

- push the processed content to S3

- archive the resources

All of the necessary steps can be performed using command-line tools available on most systems, meaning it can be easily automated.
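As a rough illustration of how the four steps might be chained, here is a minimal sketch of a driver script. The `run` and `deploy` names, the `DRY_RUN` switch and the reduced option sets are our own assumptions for illustration; the actual commands and their options are covered step by step in the sections that follow. The sketch uses `find -exec` instead of a pipe so that each step is a single command that can be printed in dry-run mode.

```shell
#!/usr/bin/env bash
# Run a command, or only print it when DRY_RUN=1 (handy for checking the sequence safely).
run() {
    if [ "${DRY_RUN:-0}" = "1" ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

# The four steps, in order. Paths, URLs and the bucket are placeholders.
deploy() {
    local content_dir=$1 bucket=$2
    # 1. crawl the website
    run wget --timestamping -x -r -i list -o crawl.log
    # 2. process the downloaded resources
    run find "$content_dir" -type f -exec sed -i 's/old_url/new_url/g' {} +
    # 3. push the processed content to S3
    run s3cmd sync --delete-removed --acl-public "$content_dir/" "$bucket"
    # 4. archive the resources
    run tar -czf "$(date +%F-%H-%M).tar.gz" "$content_dir"
}
```

Calling `DRY_RUN=1 deploy /path/to/downloaded/content s3://your-bucket-url` prints the four commands without executing them, which is a cheap way to review the sequence before running it for real.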

Now we will go into a bit more detail of how we implemented the previous steps.

Crawling the website

To crawl the website we’re using one of the most popular and reliable tools available on most *nix systems, wget:

wget --timestamping -x -r -i list -o crawl.log

-x option preserves the directory structure of your site.

--timestamping is used for download optimization. It tells wget to look at the Last-Modified header sent by the server and compare that timestamp with the previously downloaded file. This way unchanged resources won’t be downloaded again.

-r tells wget to recursively crawl the site

-i list is used to specify the exact resources to download. In most cases it would be unnecessary, and one could simply specify the URL of the home page. It becomes necessary when you have resources that are not linked anywhere on the site or that are referenced in a non-standard tag. In those situations the crawler will most likely miss them and they won’t be downloaded.

-o crawl.log just tells wget to store all the messages to a log file.
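One possible way to build the list file is to extract the URLs from an XML sitemap, if your site publishes one. The sketch below is an assumption on our part rather than the method used for our site: the extract_locs helper and the sitemap URL in the usage comment are hypothetical, and the simple grep-based parsing assumes each `<loc>` element sits on a single line.

```shell
#!/usr/bin/env bash
# Print every URL found inside <loc>...</loc> elements of sitemap XML read from stdin.
extract_locs() {
    grep -o '<loc>[^<]*</loc>' | sed -e 's|<loc>||' -e 's|</loc>||'
}

# Hypothetical usage (not executed here):
#   curl -s https://wordpress.internal.example/sitemap.xml | extract_locs > list
#   echo 'https://wordpress.internal.example/unlinked-page/' >> list
```

Resources that are not in the sitemap either can still be appended to the list by hand, as the second usage line suggests.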

Processing the downloaded resources

If you use absolute paths on your website, you’re going to need to replace all references to your WordPress URL with the domain where you are hosting the site.

find /path/to/downloaded/content -type f -print0 | xargs -0 sed -i 's/old_url/new_url/g'

Let’s break the command into two parts so it will be easier to understand.

find /path/to/downloaded/content -type f -print0

-type f tells find to look only for files in the downloaded resources, so directories are not passed on for processing.

-print0 separates the file names in the output with a null character instead of a newline, so names containing spaces or newlines are handled safely.

The output of the find command is then piped to:

xargs -0 sed -i 's/old_url/new_url/g'

xargs -0 reads the null-separated file names and passes them as arguments to sed.

sed -i 's/old_url/new_url/g' replaces all instances of old_url with new_url in the files, editing them in place.
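Real URLs contain slashes and dots, which clash with the / delimiter and with regular expression syntax in the sed expression. The following is a minimal sketch of a wrapper that sidesteps both, assuming GNU sed and GNU xargs; the rewrite_urls name and the example URLs are hypothetical.

```shell
#!/usr/bin/env bash
# Replace every occurrence of OLD_URL with NEW_URL in all files under DIR.
# Usage: rewrite_urls DIR OLD_URL NEW_URL
rewrite_urls() {
    local dir=$1 old=$2 new=$3
    local old_escaped new_escaped
    # Escape characters that are special in a basic regex, plus the | delimiter.
    old_escaped=$(printf '%s' "$old" | sed -e 's/[][\.*^$|]/\\&/g')
    # Escape characters that are special in the replacement text, plus the | delimiter.
    new_escaped=$(printf '%s' "$new" | sed -e 's/[\&|]/\\&/g')
    # -r (GNU xargs) skips running sed when find returns no files.
    find "$dir" -type f -print0 | xargs -0 -r sed -i "s|${old_escaped}|${new_escaped}|g"
}
```

For example, `rewrite_urls /path/to/downloaded/content https://wordpress.internal.example https://www.example.com` would rewrite the internal URL to the public one. Using | as the delimiter means the slashes in the URLs need no escaping.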

Pushing the processed content to S3

Pushing the content is done using the s3cmd tool. It’s a free and open source command line tool, created specifically to interface with AWS S3.

s3cmd sync --config=/path/to/config_file --region=s3_region \
  --delete-removed --no-mime-magic --guess-mime-type --acl-public \
  --exclude "*.jpg" --exclude "*.png" \
  /path/to/downloaded/content/ s3://your-bucket-url > prod_push.log

Let’s break down the parameters to better understand what is going on.

sync is used to sync the content located in /path/to/downloaded/content. One could push and overwrite all the content, but using sync is more efficient as it pushes only the changes.

--config=/path/to/config_file --region=s3_region specify the path to the credentials file for S3 and the region where the bucket is located.

--delete-removed will delete objects in the s3 bucket that are not present in your downloaded content. It’s useful when you want to remove a page from the site that you also removed from WordPress.

When you use wget with the --timestamping option, you must keep a copy of the previously downloaded files. However, wget will not remove a local file just because it no longer crawled it, which means the --delete-removed option of s3cmd won’t work as intended: a page deleted in WordPress still exists locally and therefore stays in the bucket. To actually remove such files from the bucket, delete your local copy of the downloaded files before crawling. Removing pages from the live site is not a common occurrence in our case, so it isn’t a problem for us; it just takes a minute longer for the process to complete. Nevertheless, a workaround for this problem would be nice.

--no-mime-magic tells s3cmd not to use the python-magic library to set the MIME type of the object. We had some issues with the python-magic library. The problem was it wouldn’t recognize CSS files properly and would set the wrong mime type.

--guess-mime-type will set the mime type of the object based on the extension of the file. This solved our wrong mime type issue.

--acl-public will make the object publicly readable.

--exclude "*.jpg" --exclude "*.png" excludes pictures from this push. They are pushed to the bucket by the next command instead, because we wanted to apply Cache-Control headers to pictures only. It’s not advisable to set this header to a high value for resources whose content changes often while their name stays the same, such as your homepage. You don’t want your users to see an old copy of your page when there is a new one available. Pictures don’t change that often, so it’s safe to cache them for a long time.

s3cmd sync --config=/path/to/config_file --region=s3_region --delete-removed \
  --no-mime-magic --guess-mime-type --acl-public --exclude "*" --include "*.jpg" \
  --include "*.png" --add-header=Cache-Control:max-age=31557600 \
  /path/to/downloaded/content/ s3://your-bucket-url >> prod_push.log

This command is very similar to the previous one. What we’re doing here is excluding all the content already pushed and including pictures only.

We also add the header to the pictures with the --add-header=Cache-Control:max-age=31557600 option.
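The max-age value is expressed in seconds. The number used here appears to correspond to one year of 365.25 days; that is our reading of the value rather than something the header requires. A quick way to check the arithmetic in the shell:

```shell
#!/usr/bin/env bash
# 365.25 days * 86400 seconds per day, written with integers only:
# 36525 (days, in hundredths) * 864 (seconds per hundredth of a day).
max_age=$(( 36525 * 864 ))
echo "Cache-Control:max-age=${max_age}"
```

Any other lifetime can be computed the same way, for example 30 days as `$(( 30 * 86400 ))`.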

Another way this could have been done is to push the whole content and then use the s3cmd modify command instead of a second s3cmd sync.

It really doesn’t make much difference how you do it; the same amount of content will be synced to the bucket anyway.

This tool is really flexible, with a ton of options that allow you to fine tune your process.

Once the content is in the bucket, we invalidate the CloudFront cache so that visitors are served the new version of the site.

aws cloudfront create-invalidation --distribution-id your_distribution_id --paths "/*"

Archiving the resources

Once the deployment is done, we archive the downloaded resources in case we need them for future use.

tar -czvf $(date +%F-%H-%M).tar.gz /path/to/downloaded/content/

The archive name is just a matter of preference. I like to have it in the YYYY-MM-DD-HH-mm.tar.gz format.

More flexibility

Some of you might have noticed that we have a Twitter feed in our website footer. Doing a push every time someone tweets something is a hassle, and we needed a better solution for that. As mentioned before, having our site hosted in an S3 bucket gives us the possibility to integrate other AWS services easily. AWS Lambda seemed like the perfect solution for that.

We wrote a Lambda function that pulls the latest tweets, formats them as HTML and pushes the created file to our bucket. We then use jQuery to display the content in the footer. CloudWatch triggers the Lambda function at regular intervals. As you can see, it’s possible to take advantage of the benefits of having a static website while keeping some dynamic functionality.

The features that we lacked with WP2Static can easily be implemented using the same commands we saw. Pushing to a different bucket can be done by modifying the s3cmd command destination.
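Switching the destination can be as simple as mapping an environment name to a bucket. The sketch below is only an illustration: the bucket_for helper and both bucket names are made-up placeholders.

```shell
#!/usr/bin/env bash
# Map an environment name to its bucket URL (both bucket names are placeholders).
bucket_for() {
    case "$1" in
        prod)    printf '%s\n' 's3://www.example.com' ;;
        staging) printf '%s\n' 's3://staging.example.com' ;;
        *)       printf 'unknown environment: %s\n' "$1" >&2; return 1 ;;
    esac
}

# Hypothetical usage (not executed here):
#   s3cmd sync ... /path/to/downloaded/content/ "$(bucket_for staging)"
```

Failing on unknown names, rather than falling back to a default, avoids pushing to production by accident because of a typo.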

Doing a partial website push requires altering the download list in the wget command. Be careful not to run the s3cmd command with the --delete-removed flag in this case, as it will delete every object in the bucket that is not part of the local content, potentially your whole site. Reverting to a previous version requires unpacking the archive and pushing the content.
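To make the archive and revert steps concrete, here is a minimal sketch of a pair of helper functions. The archive_site and restore_site names are hypothetical, and unlike the tar command shown earlier this version uses -C so that the archive stores paths relative to the content directory and can be unpacked anywhere.

```shell
#!/usr/bin/env bash
# Archive CONTENT_DIR into ARCHIVE_DIR under a timestamped name and print the archive path.
# ARCHIVE_DIR is assumed to be outside CONTENT_DIR.
archive_site() {
    local content_dir=$1 archive_dir=$2
    local archive
    archive="$archive_dir/$(date +%F-%H-%M).tar.gz"
    # -C stores paths relative to the content directory.
    tar -czf "$archive" -C "$content_dir" .
    printf '%s\n' "$archive"
}

# Unpack a previously created archive into TARGET_DIR, ready to be pushed with s3cmd.
restore_site() {
    local archive=$1 target_dir=$2
    mkdir -p "$target_dir"
    tar -xzf "$archive" -C "$target_dir"
}
```

After restore_site, the target directory can be pushed with the same s3cmd sync commands used for a normal deployment.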

Simplifying the process

To simplify the deployment process for our website editors, we created an HTTP API using Kapow! and a simple web interface to access the API.

Kapow! is an open-source tool that lets you create an HTTP API for your shell scripts. We decided to go with Kapow! as it fits our use case perfectly.

We developed the shell scripts before we decided to create the API, and Kapow! was an easy way to do that without having to change too much code. The same thing can, of course, be achieved using PHP, Python or whatever language you feel most comfortable with.

We won’t go into the inner details of how it works, since that’s out of scope for this post (you can find it all in the official documentation). However, we’re going to talk a bit about how to set it up.

Setting up Kapow!

Kapow! uses so-called pow files, in which you define your API routes and the shell scripts or commands they run. Given the relatively small number of routes we’re using (11 to be precise), we found it easier to define each route in its own pow file. A larger project would probably require a different structure to be easier to manage.

The routes are created using the following shell script:

#!/bin/bash
source /etc/kapow/kapow.conf
$exec_path server <(/usr/bin/cat /etc/kapow/powfiles/*.pow)

In the /etc/kapow/kapow.conf file we define the $exec_path variable (the path to the kapow executable) and some variables we’d like all the shell scripts to share.

Having a startup script also simplified daemonizing Kapow! using systemd.

Managing API routes

Since we’re using two different S3 buckets, one as a production environment and one as a staging environment, we decided to go with the following naming pattern:

/kapow/{environment}/action

We’re also using two sets of shell scripts, one for each environment. Let’s take a look at the content of one pow file to get a better feel of how it works.

In this file we defined a route that invokes a script that reverts our live website to a previous version.

kapow route add '/kapow/prod/revert/{version}' -c 'kapow get /request/matches/version |bash /home/kapow/revert-prod.sh| kapow set /response/body'

kapow route add '/kapow/prod/revert/{version}' defines a new route named /kapow/prod/revert/{version}. In this context, version is a variable.

kapow get /request/matches/version reads the value matched by the {version} variable and pipes it to the /home/kapow/revert-prod.sh script.

kapow set /response/body sets the response body. Since our script doesn’t return any output, the route just returns a 200 OK code.

All the other routes are defined in a similar fashion.
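Because the version arrives straight from the URL, a script like revert-prod.sh would do well to validate it before using it in a file name. The contents of our script are not shown in this post, so the following is only a sketch of that idea, assuming versions follow the YYYY-MM-DD-HH-mm archive naming used earlier. The valid_version and archive_for_version names are hypothetical.

```shell
#!/usr/bin/env bash
# Succeed only if the argument looks like YYYY-MM-DD-HH-mm (digits and dashes, nothing else).
valid_version() {
    [[ $1 =~ ^[0-9]{4}-[0-9]{2}-[0-9]{2}-[0-9]{2}-[0-9]{2}$ ]]
}

# Read the version from stdin (the route pipes it in) and print the archive name to restore.
archive_for_version() {
    local version
    # read fails on input without a trailing newline but still fills the variable.
    IFS= read -r version || true
    if ! valid_version "$version"; then
        echo "invalid version" >&2
        return 1
    fi
    printf '%s.tar.gz\n' "$version"
}
```

Rejecting anything that is not strictly digits and dashes keeps values such as ../../etc/passwd from ever reaching tar or s3cmd.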

We found Kapow! to be a great and flexible tool, and to be honest it’s fun to use. If you haven’t already tried it, we definitely recommend you give it a go and let us know your opinion about it in the comments.

Conclusion

In this post we’ve gone through some of the benefits and disadvantages of static and dynamic websites. We also described some tips and tools we’re using at Sysbee to create our static website. What’s your opinion on the topic? Do you have any tips and tricks you use to create your own website?

Let us know in the comments below or get in touch. We’ll be happy to hear your suggestions!