Poor man’s performance and error tracing (ft. HAProxy)

It’s that time again. Yes, you got it right, another article in the series on how you can utilize HAProxy for more than just load balancing. Even more importantly, find out how it can help you as a DevOps guy to gain more insight into what’s happening in your stack.

Not all infrastructures are massively distributed, and implementing some distributed tracers would be overkill. If you are in some sort of tracing and insights needed for small or midsize infrastructures, do carry on reading.

For all of you big guys there using some advanced tracing tool, I invite you to skim through this post, as you might find some useful bits as well.

As we already mentioned in a previous post, HAProxy is a sysadmin’s swiss army knife. Now, let’s explore some other ways we tend to use this handy piece of software to keep tabs on how well the application is performing.

You can learn a lot from logs

I remember the first time I looked into default HAProxy log formats. The information was overwhelming, but it’s not that scary when you break things down a bit.

Feb 5 18:09:44 localhost haproxy[1275]: 192.168.1.15:58198 [05/Feb/2021:18:09:44.162] ft_eth0_cp0 bk_eth0_cp0/apache 0/0/0/80/80 200 636 - - --NI 1/1/0/0/0 0/0 "GET / HTTP/1.1"

Here’s what your default HAProxy HTTP logline might look like. The log format is very well documented in official documentation, so for the sake of keeping this article shorter, I will only focus on what’s interesting for this topic.

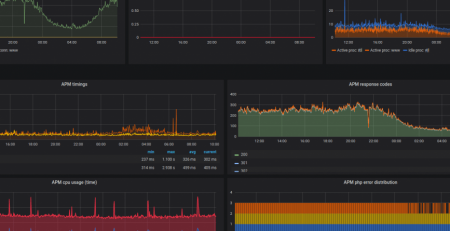

Timers

Right in front of the return status code (200), you will find a group of numbers (0/0/0/80/80) that are your request timers TR, Tw, Tc, Tr, and Ta.

You will find more detailed information in documentation, but here are TL;DR meanings of what those numbers are:

- TR – time (ms) spent waiting for a full HTTP request from the client

- Tw – time (ms) spent in various HAProxy queues (if configured)

- Tc – time (ms) spent waiting for the connection to the backend server to be established

- Tr – time (ms) spent waiting for the backend server to send the full HTTP response

- Ta – is the total time (ms) from the first byte of the request to the last byte processed

Armed with this knowledge, we can already draw lots of conclusions about what is exactly happening with the request.

If the client is having a poor connection to the server TR timer will usually be high (you want to aim as low as possible here).

If your requests spend a lot of time in queues, Tw will be higher and might indicate the client is throttled or the backend is overloaded.

Higher Tc can be observed when requests are retried and/or indicate some network-level issues to the backend server. It can also be indicative that the backend server itself might have some issues.

Finally, the Tr is what you might know as the TTFB (from HAProxy’s perspective), and this can be a good indicator of the backend application performance. Large values might indicate server overload or if other backend server metrics show poor application performance.

Ta will usually be a sum of all the above + some extra for data transfer, again a large difference of the sum of all previous timers, and this metric might indicate slow network connection for data transfer to the end client.

Exporting the timers in response

For the lazy or the brave, you don’t really need to look at the logs for this, you can also export the timers in HTTP response. Most common browsers nowadays support Server Timing API. By using them, you can inspect the timers in the Developers console for each request under Timers.

To get the above to show in your browser, all you need to do is place this line into your HAProxy frontend section

http-response set-header Server-Timing miss,\ 0_TR_client_wait;dur=%TR,\ _Tw_queues;dur=%Tw,\ 2_Tc_bak_connect;dur=%Tc,\ 3_Tr_bak_response;dur=%Tr

Session state at the disconnect

The next useful piece of information from the logs that I like to use a lot in debugging is the session state at disconnect (– -NI). These four letters can tell you a lot about what happened with the request and, the linked documentation has all the combinations covered.

In this example, we can see that no cookie was provided by the client, and one was inserted in the response.

There are many combinations, so I suggest you consult the manual about this field to learn the state for your specific request of interest.

Honorary mentions

While not directly related to inspecting the application performance, these are by no means less relevant information in the logs. As an exercise for the reader, you will need to look into the documentation to get more info about the following specifics:

- status codes – as we all know, this can be very useful in filtering good and bad requests

- bytes_read – knowing how much data was transferred can be useful while debugging some high Ta timers

- actconn, feconn, beconn, srv_conn, retries – again, not directly related to app performance but given the context around this connection states

All log lines are important, but some are more than others..

We can all agree that some log lines are not as important compared to others. You might have a bazillion of traffic, and keeping all the logs might be too expensive.

If that’s the case, I recommend you read this excellent post about log sampling.

In most of our use-cases, what makes sense is, having a sample rate for regular (non-error) requests and log all errors or requests of interest.

For example:

# Sampled logs: log 192.168.1.100:514 sample 1:10 local0 info # Error logs: log 192.168.1.101:514 local0 err

and then in the frontend

frontend www

bind :80

acl failed_request status 400 401 403 404 405 408 429 500 503

http-response set-log-level err if failed_request

Follow the trail

At this point, we have some insights on what’s happening and for how long, but this doesn’t really tell us much about why. Oftentimes you will need to follow those log lines along with the stack.

For example, let’s say we have HAProxy in front of 2 Apache web servers serving any number of backend applications via PHP-FPM pools.

In situations where you need to find out what service returned error 5xx, you can easily rule out HAProxy by session state at a disconnect, but the error could be returned either by Apache or the PHP itself. To be sure, you will need to inspect their logs as well.

Starting at the HAProxy logs can save you some time because you will find out where the request was routed. Even if you have a centralized logging set, you still need to correlate those requests by the requested URI, timestamp, and the client’s IP addresses, and this can be a nightmare on busy servers.

Every request is unique (and special)

Unique-id to the rescue!

I often like to say, this is a poor man’s distributed tracing. The idea here is to generate a unique id for each request and save it in logs, propagate that ID via headers to all downstream so they can also save the same request ID in their logs.

This makes life much easier when you need to find the same request in all log files.

To get things started, let’s place the following configuration into the HAProxy frontend.

# Generate unique request ID and assign the value to X-Req-ID header

unique-id-format %{+X}o\ %ci_%cp%fi%fp%Ts%rt

unique-id-header X-Req-ID

We are defining a hexadecimal form for the unique ID composed of the client’s IP address, client’s port, frontend’s IP address, frontend’s port, timestamp, and the request counter.

In the end, we are left with something like this:

B2DAA509_E6A0AC1F0A230050601D951A0004

In the second line, we are propagating that Unique ID via X-Req-ID header.

For now, HAProxy will just assign and forward this header. To log this unique ID we need to modify default log format.

# Standard log format

log-format "%ci:%cp [%tr] %ft %b/%s %TR/%Tw/%Tc/%Tr/%Ta %ST %B %CC %CS %tsc %ac/%fc/%bc/%sc/%rc %sq/%bq %ID %hr %hs %{+Q}r"

Those with the keen eyes will notice that this is just a standard HAProxy log format with inserted %ID. For the most part, the logline will look just like before.

Since we are forwarding the unique header, we can capture it in the most common web server log formats as well.

Let’s modify the Apache config.

# Combined log format with logging of X-Req-ID header

LogFormat "%a %l %u %t %{X-Req-ID}i \"%r\" %>s %b \"%{Referer}i\" \"%{User-Agent}i\"" combined-tracing

All we need to do is use combined-tracing in the access log definition.

For the Nginx, you can use the following format:

log_format main '$remote_addr - $remote_user [$time_local] "$request" $status $body_bytes_sent "$http_referer" "$http_user_agent" "$http_x_forwarded_for" "$http_x_req_id"';

And for the PHP-FPM

access.format = "[%t] %m %{HTTP_X_REQ_ID}e %f %Q%q pid:%p took:%ds mem:%{mega}Mmb cpu:%C%% status:%s"

With the unique ID being logged in each service’s log file, you can use it to identify the specific request and its journey through the stack.

With the mentioned PHP access log definition, you can also trace the memory and CPU usage, as well as the processing time for each request.

Needless to say, even some microservices clusters can use this approach for correlating log lines with the X-Req-ID header, just modify your default log formats, and off you go.

Let’s return the header as well

Similarly to the timers, we can export this unique ID header to the browser.

This can be a huge time saver for developers to inspect their browser’s console and find the unique ID they can search in the logs.

It’s as simple as setting the following in HAProxy’s frontend section:

# return the unique-id via X-Req-ID on response for easier debugging http-response set-header X-Req-ID %ID

Make user errors pages helpful

Ever got the screenshot of a generic error 5xx or 4xx from the end-user?

Remember the time spent tracing that error. Dynamic error pages to the rescue. As described in the linked post from the official HAProxy blog, you can now serve dynamically generated error pages, which is super useful in tracing the errors.

By entering something like this in the frontend

http-response return status 500 content-type "text/html; charset=utf-8" lf-file /etc/haproxy/errors/500.html if { status 500 }

and creating the error page with the following content

<!DOCTYPE html>

<html lang="en">

<head>

<meta charset="utf-8" />

<title>500 Internal server error</title>

</head>

<body>

<main>

<h1>500 Ooops something went wrong :( </h1>

<p>Something went very very wrong with your request</p>

<p>When reporting this error, please include the following</p>

<p>Unique ID: %[unique-id]</p>

</main>

</body>

</html>

When triggering a 500 error from the backend, the end-user will get the following error page

Yes, we can all agree that I’m a lousy front-end designer. I tend to leave that to the professionals. 🙂

Anyway, getting a unique request ID in an error report is a killer feature. Once we can implement it in an HTML page like this, the sky is the limit. We can make this page more user-friendly to encourage error reporting or even capture it for Sentry breadcrumbs.

One additional benefit of this approach is hiding possibly sensitive application error tracebacks. It’s not uncommon to see database credentials or user-sensitive information in them. Having HAProxy intercept those errors is an added security benefit.

What else can you use?

Armed with the knowledge of how you can trace the HTTP request in multiple log files and have some performance insights, one might wonder what else your favourite LB offers. As usual, it depends on the case you have. Some might need to track specific headers or cookies in the logs.

I hope that this article gave you at least some new ideas or insights into what is doable to make every DevOps’ day-to-day tasks a bit easier.

I invite you to keep checking our blog for future tips and tricks. As always, if you have questions or a tip of your own, share it with us in comments or via e-mail.