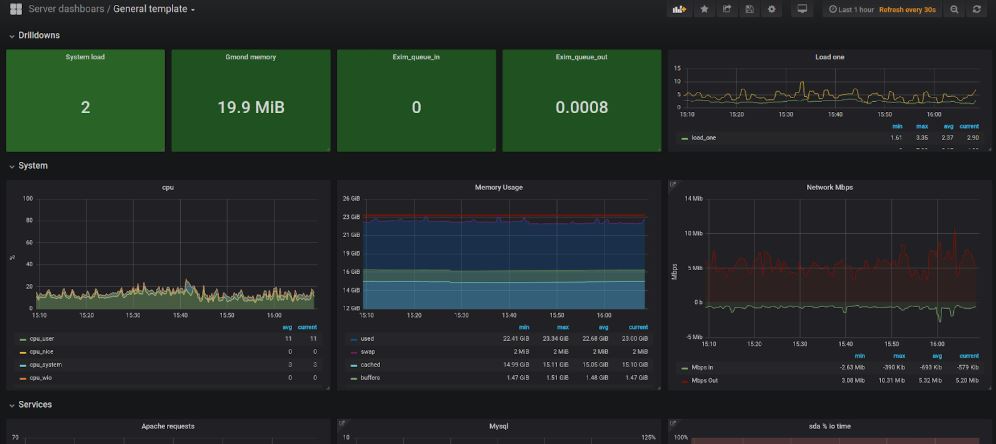

Monitoring origins: Where we are today

Continuing from our last blog post, "Monitoring origins: Where it all began", in this post we’ll discuss the most popular monitoring setups currently used by IT technicians around the world.

Today, time series databases (TSDBs) dominate the monitoring world. TSDBs are specialized databases designed to store large amounts of time-indexed data.

Strictly speaking, both RRD and Carbon/Whisper fit the definition of a TSDB as well. However, the term only became a buzzword around 2013, with the appearance of two significant players in the field: InfluxDB and Prometheus.

InfluxDB vs Prometheus

Essentially, both databases are built for collecting metrics, and both do it exceptionally well. Their performance is equally enviable: some benchmarks show ingestion rates of over 300,000 metrics per second on commodity hardware.

From our perspective, InfluxDB is much closer to the model we’ve been used to working with so far. It also offers some additional benefits that we consider essential for developing business solutions, so we’ll focus on the TICK stack for the rest of this post.

That said, most of what follows can be accomplished just as easily with Prometheus.

Below is a quick side-by-side comparison of the two.

InfluxDB:

- OpenCore
- HA support (paid version)
- Kapacitor
- Push
- Single binary collector
- Interoperability
- Multiple databases per server
- Numeric + string values
- Rollup

Prometheus:

- OpenSource
- Large, fast-growing community
- AlertManager
- Pull
- Multiple exporters
- Slightly better query language (PromQL)
- Numeric values only
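To make the push vs. pull distinction more concrete, here is a minimal Python sketch (the helper names are hypothetical) that renders the same CPU sample in InfluxDB line protocol, which an agent pushes to the database, and in the Prometheus text exposition format, which an exporter serves over HTTP for Prometheus to pull. It assumes numeric values and tag/label values without spaces, commas, or quotes that would need escaping.

```python
# Hypothetical helpers: the same CPU sample in a push and a pull wire format.
# Assumes numeric values and tag/label values that need no escaping.


def to_line_protocol(measurement, tags, fields, ts_ns):
    """InfluxDB line protocol: measurement,tag=v,... field=v,... timestamp_ns"""
    tag_str = "".join(f",{k}={v}" for k, v in sorted(tags.items()))
    field_str = ",".join(f"{k}={v}" for k, v in fields.items())
    return f"{measurement}{tag_str} {field_str} {ts_ns}"


def to_prometheus(name, labels, value):
    """Prometheus text exposition format: name{label="v",...} value"""
    label_str = ",".join(f'{k}="{v}"' for k, v in sorted(labels.items()))
    return f"{name}{{{label_str}}} {value}"


tags = {"host": "web01", "cpu": "cpu-total"}
# Push: an agent sends this line to InfluxDB.
print(to_line_protocol("cpu", tags, {"usage_user": 12.5}, 1600000000000000000))
# Pull: an exporter serves this line (conventionally on /metrics) for scraping.
print(to_prometheus("cpu_usage_user", tags, 12.5))
```

The data is identical; what differs is who initiates the transfer. Sorting tags by key is optional in line protocol but is what InfluxDB recommends for write performance.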

TICK Stack

TICK is an abbreviation of the InfluxData product group: Telegraf, InfluxDB, Chronograf and Kapacitor.

Telegraf

Telegraf is an agent shipped as a single Go binary, with over 200 plugins for collecting metrics from various sources. After collecting the metrics, Telegraf sends them to InfluxDB over HTTP. Beyond the standard plugins, metric collection is also very easy to extend with custom executables.
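Under the hood, such a write is a plain HTTP request. As a rough sketch, assuming an InfluxDB 1.x server on its default port 8086 and a database named `telegraf`, the following builds (without sending) the kind of `POST /write` request that carries newline-separated line protocol. `build_write_request` is a hypothetical helper for illustration, not part of Telegraf.

```python
from urllib.parse import urlencode
from urllib.request import Request


def build_write_request(base_url, database, lines, precision="s"):
    """Build (but do not send) an InfluxDB 1.x /write request.

    The body is newline-separated line protocol; precision tells InfluxDB
    the unit of the timestamps in the body ("s" = seconds here).
    """
    query = urlencode({"db": database, "precision": precision})
    body = "\n".join(lines).encode("utf-8")
    return Request(f"{base_url}/write?{query}", data=body, method="POST")


req = build_write_request(
    "http://127.0.0.1:8086",  # assumed local InfluxDB 1.x instance
    "telegraf",
    ["cpu,host=web01 usage_user=12.5 1600000000"],
)
print(req.get_method(), req.full_url)
```

Passing the request to `urllib.request.urlopen` would actually send it; InfluxDB 1.x answers a successful write with `204 No Content`.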

For example, to collect data from PowerDNS we can use a custom shell script, much like Ganglia’s gmetric, and inject its output into our metric store through Telegraf.

Below is an example of such a configuration:

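```
cat telegraf.d/pdns.conf

[[inputs.exec]]
  # Script whose standard output is parsed as metrics
  commands = ["/etc/telegraf/pdns-recursor.sh"]
  # Kill the script if it runs longer than this
  timeout = "5s"
  # Appended to the measurement name ("exec" becomes "exec_pdns")
  name_suffix = "_pdns"
  # Parse the output as a flat JSON object of numeric values
  data_format = "json"
```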

And here is the executed script itself: a very simple curl request to the PDNS JSON statistics API, piped to jq to transform the response into a flat object that Telegraf can turn into a series for InfluxDB.

```bash
#!/bin/bash
# Fetch PowerDNS Recursor statistics and flatten them into {"name": number, ...}
curl -s -H "X-API-Key: XXXXXXXXX" http://127.0.0.1:8082/api/v1/servers/localhost/statistics \
  | jq 'reduce .[] as $item ({}; . + {($item.name): ($item.value | tonumber)})'
```

With these few lines of code, we end up with a handful of very useful PDNS metrics that we can use to debug and/or monitor the performance of the DNS server itself.
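For readers less familiar with jq: the `reduce` expression folds the array of `{"name": ..., "value": ...}` objects returned by the statistics API into a single object mapping each name to a number. Here is the same transformation as a Python sketch (`flatten_stats` and the sample data are hypothetical). One deliberate difference, which is our own choice: instead of aborting the way `tonumber` would, it skips entries whose value isn't a plain number.

```python
import json


def flatten_stats(items):
    """Fold [{"name": n, "value": v}, ...] into {n: float(v), ...}.

    Same idea as the jq reduce in pdns-recursor.sh, except that entries
    whose value is not a plain number are skipped instead of aborting.
    """
    result = {}
    for item in items:
        try:
            result[item["name"]] = float(item["value"])
        except (TypeError, ValueError):
            continue  # e.g. a list- or map-valued statistic
    return result


# Hypothetical excerpt shaped like the Recursor statistics response.
sample = json.loads(
    '[{"name": "all-outqueries", "type": "StatisticItem", "value": "1200"},'
    ' {"name": "cache-hits", "type": "StatisticItem", "value": "845"}]'
)
print(json.dumps(flatten_stats(sample)))
```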

Another good example would be the StatsD interface.

StatsD is well established in all mainstream programming languages and frameworks, and Telegraf supports it natively. A great deal of software can expose specific metrics through StatsD. As a result, developers can mark the points in an application where they want to gather monitoring information and push that data to Telegraf over the StatsD protocol.

We can easily enable it in the Telegraf configuration file like so:

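```toml
[[inputs.statsd]]
  protocol = "udp"
  # Only used when protocol = "tcp"
  max_tcp_connections = 250
  service_address = "127.0.0.1:8125"
  # Reset gauges, counters, sets and timings after every flush interval
  delete_gauges = true
  delete_counters = true
  delete_sets = true
  delete_timings = true
  # Calculate the 90th percentile for timings
  percentiles = [90]
  metric_separator = "_"
  parse_data_dog_tags = false
  allowed_pending_messages = 10000
  # Number of timing values kept per metric for percentile calculation
  percentile_limit = 1000
```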
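To show what the application side of this looks like, here is a minimal Python StatsD client sketch. It assumes the listener configured above on UDP 127.0.0.1:8125, and the metric names are hypothetical. Each metric is a single `name:value|type` datagram, where the type is `c` for counters, `g` for gauges, `ms` for timings and `s` for sets.

```python
import socket

# Assumes the Telegraf statsd listener configured above (UDP 127.0.0.1:8125).
STATSD_ADDR = ("127.0.0.1", 8125)


def statsd_line(name, value, metric_type):
    """Format one StatsD metric as name:value|type.

    metric_type: "c" counter, "g" gauge, "ms" timing, "s" set.
    """
    return f"{name}:{value}|{metric_type}"


def send(line, addr=STATSD_ADDR):
    """Fire-and-forget UDP send; nothing waits for an acknowledgement."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(line.encode("utf-8"), addr)


if __name__ == "__main__":
    # Hypothetical metric names for an application's checkout endpoint.
    send(statsd_line("checkout.requests", 1, "c"))
    send(statsd_line("checkout.response_time", 183, "ms"))
```

Because the transport is UDP, an unavailable or slow collector never blocks the application; the trade-off is that some datagrams may be lost.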

Next, we can, for example, install the PHP APM (application performance monitoring) extension with the pecl command and add the following block to the PHP configuration (here, a separate /etc/php.d/40-apm.ini file):

```
[root@host ~]# pecl install apm
[root@host ~]# cat /etc/php.d/40-apm.ini
extension = apm.so
apm.store_stacktrace = Off
apm.store_ip = Off
apm.store_cookies = Off
apm.store_post = Off
apm.statsd_exception_mode = 0
apm.statsd_error_reporting = E_ALL | E_STRICT
apm.statsd_enabled = On
apm.statsd_host = localhost
apm.statsd_port = 8125
```

As a result, we get basic information about the performance of the PHP applications running on that host: for example, the average response time of the PHP codebase, how much CPU time it consumed, the error distribution, which response status codes it produced, etc.

Such information is priceless for projects with high SLA requirements, and for debugging issues by correlating the metrics with codebase changes.

InfluxDB

Moving on to the next layer of the TICK stack: InfluxDB. As the diagram below indicates, it’s a purpose-built time series database.

Some of its most notable features are:

- SQL-like query language (InfluxQL)
- Functional query language Flux (currently in beta)
- Organization of data within:
  - databases
  - retention policies
  - measurements
  - series
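To illustrate how these levels relate: within a database and retention policy, a measurement groups related points, and a series is one unique combination of measurement and tag set, while fields hold the actual values. The following Python sketch (hypothetical data and helper name) groups a few points by that identity. Note that tag order doesn't matter, while a different `host` tag value produces a separate series.

```python
from collections import defaultdict


def series_key(measurement, tags):
    """A series is identified by its measurement plus its (sorted) tag set."""
    return measurement + "".join(f",{k}={v}" for k, v in sorted(tags.items()))


# Hypothetical points: (measurement, tags, fields)
points = [
    ("cpu", {"host": "web01", "cpu": "cpu-total"}, {"usage_user": 12.5}),
    ("cpu", {"host": "web02", "cpu": "cpu-total"}, {"usage_user": 40.1}),
    ("cpu", {"cpu": "cpu-total", "host": "web01"}, {"usage_user": 13.0}),
]

series = defaultdict(list)
for measurement, tags, fields in points:
    series[series_key(measurement, tags)].append(fields)

for key, values in series.items():
    print(key, values)
```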

As a database, InfluxDB can also execute continuous queries. You can think of a continuous query as a perpetual query that keeps running periodically in the background. These queries are most commonly used to reorganize data within a database or to roll data up into another retention policy.
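In InfluxQL, a continuous query wraps a `SELECT ... INTO ... GROUP BY time(...)` statement. The Python sketch below only illustrates the bucketing and averaging such a rollup performs, using a hypothetical `rollup` helper and made-up data; it is not InfluxDB’s implementation.

```python
from collections import defaultdict
from statistics import mean


def rollup(points, bucket_seconds=300):
    """Average (timestamp_s, value) points into fixed, aligned time buckets.

    Roughly what mean() with GROUP BY time(5m) does: every bucket is
    labelled with its start time.
    """
    buckets = defaultdict(list)
    for ts, value in points:
        buckets[ts - ts % bucket_seconds].append(value)
    return sorted((start, mean(values)) for start, values in buckets.items())


# Hypothetical data: ten minutes of 15-second samples (40 points).
samples = [(t, 10.0 if t < 300 else 30.0) for t in range(0, 600, 15)]
print(rollup(samples))  # [(0, 10.0), (300, 30.0)]
```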

Unlike RRD-based systems, InfluxDB doesn’t put as much pressure on disk I/O.

However, because of the additional metadata stored as tags, its disk space footprint is slightly larger.

Chronograf

We will only briefly mention Chronograf as an option, since we don’t use it in our monitoring setup. Chronograf is a web frontend for displaying metrics and communicating with Kapacitor.

For everyday use, we’ve replaced it with Grafana as it offers more powerful visualization options.

Kapacitor

Kapacitor is, as the image below mentions, a real-time streaming data processing engine.

We consider Kapacitor a sort of “TICK stack Swiss Army knife” because we can use it for many different use cases.

InfluxData initially designed Kapacitor for alerting and anomaly detection. Below is an example TICK script that alerts on high user CPU usage.

We look at the cpu measurement, specifically the cpu-total series, and calculate the average user CPU usage over a 5-minute window, re-evaluated every minute. If that average exceeds 80%, we send an alert to a Slack channel and by email.

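```
// cpu.tick
// Process every incoming point of the cpu measurement as a stream
stream
    |from()
        .measurement('cpu')
    // Keep only the aggregate series for all cores
    |where(lambda: "cpu" == 'cpu-total')
    // 5-minute window, emitted every minute
    |window()
        .period(5m)
        .every(1m)
    |mean('usage_user')
        .as('mean_usage_user')
    // Go critical when the windowed average exceeds 80%
    |alert()
        .crit(lambda: "mean_usage_user" > 80)
        .message('cpu usage high!')
        .slack()
        .channel('alerts')
        .email('oncall@example.com')
```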
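To make the windowing in this script more tangible, here is a plain-Python approximation of the same logic: a 5-minute window evaluated every minute, averaged, and compared against the 80% threshold. It is an illustration under simplified assumptions (each window covers `(now - 5m, now]`, and the sample data is made up), not a description of how Kapacitor implements windows internally.

```python
from statistics import mean

PERIOD = 300      # .period(5m): window length in seconds
EVERY = 60        # .every(1m): how often a window is emitted
THRESHOLD = 80.0  # .crit(lambda: "mean_usage_user" > 80)


def evaluate(points, now):
    """Mean of usage_user over (now - PERIOD, now] and whether it is critical.

    points are (timestamp_s, usage_user) pairs; returns None for an
    empty window.
    """
    window = [value for ts, value in points if now - PERIOD < ts <= now]
    if not window:
        return None
    avg = mean(window)
    return avg, avg > THRESHOLD


# Hypothetical data: user CPU jumps from 50% to 95% at t=300 s, sampled every 10 s.
cpu_samples = [(t, 50.0 if t < 300 else 95.0) for t in range(0, 600, 10)]
for now in range(PERIOD, 600, EVERY):
    avg, critical = evaluate(cpu_samples, now)
    print(f"t={now}s mean={avg:.1f} {'CRITICAL' if critical else 'OK'}")
```

With this data, the alert only fires at t=540s, four windows after the jump, because the 5-minute average needs time to climb past 80%. Averaging over a window smooths out short spikes at the cost of a slower reaction.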

We can also use Kapacitor to turn InfluxDB’s push model into a pull model, much like Prometheus. Moreover, it can scrape Prometheus exporters and store the scraped metrics in InfluxDB. Kapacitor can also replicate data from one InfluxDB server to another, or downsample data.
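Kapacitor handles scraping internally, so the following is only an illustration of the idea: a hypothetical Python function that parses a few lines of the Prometheus text exposition format and re-emits them as InfluxDB line protocol. It deliberately skips samples that carry their own timestamp and ignores escaped characters in label values and NaN/Inf values; the `value` field name is our own arbitrary choice, not necessarily what Kapacitor uses.

```python
import re

# name{label="value",...} value  (one sample per line)
SAMPLE = re.compile(r'^([a-zA-Z_:][a-zA-Z0-9_:]*)(?:\{(.*)\})?\s+(\S+)$')
LABEL = re.compile(r'(\w+)="([^"]*)"')


def exposition_to_line_protocol(text, ts_ns):
    """Convert scraped Prometheus text into InfluxDB line protocol lines.

    Simplified: skips comments and samples with their own timestamp, and
    does not handle escaped characters or NaN/Inf values.
    """
    lines = []
    for raw in text.splitlines():
        raw = raw.strip()
        if not raw or raw.startswith("#"):
            continue  # blank line or HELP/TYPE metadata
        match = SAMPLE.match(raw)
        if match is None:
            continue
        name, labels, value = match.groups()
        tags = "".join(f",{k}={v}" for k, v in sorted(LABEL.findall(labels or "")))
        lines.append(f"{name}{tags} value={float(value)} {ts_ns}")
    return lines


scraped = """# HELP node_load1 1m load average.
# TYPE node_load1 gauge
node_load1 0.42
http_requests_total{code="200",method="get"} 1027
"""
for line in exposition_to_line_protocol(scraped, 1600000000000000000):
    print(line)
```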

Here is another example, this time of data downsampling. Using the same CPU measurement, every 5 minutes we take the last 5-minute window and calculate the average. We then store the results in the telegraf database, under a retention policy named 5m, and add an extra source tag to them.

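```
// batch_cpu.tick
// Every 5 minutes, query the average of the last 5 minutes of raw data
batch
    |query('''
        SELECT mean("usage_user") AS usage_user
        FROM "telegraf"."autogen"."cpu"
    ''')
        .period(5m)
        .every(5m)
    // Write the averages into the "5m" retention policy
    // (assumes that retention policy already exists)
    |influxDBOut()
        .database('telegraf')
        .retentionPolicy('5m')
        .tag('source', 'kapacitor')
```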

This is an excellent trick for reducing the storage footprint of metrics. Usually, you only need high-granularity metrics, which we collect at a 15s interval by default, for a short period.

Those metrics are precise, but they take up a large amount of storage space. With this kind of downsampling, we can keep lower-granularity metrics in long-term storage for much longer periods.
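A quick back-of-the-envelope calculation shows where the savings come from. This sketch (with a hypothetical `points_per_series` helper) counts points per series for a year of raw 15-second data versus a year of 5-minute averages. It counts points, not bytes, so actual disk savings will differ once InfluxDB’s compression comes into play.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def points_per_series(interval_s, days):
    """How many points one series accumulates at a fixed collection interval."""
    return days * SECONDS_PER_DAY // interval_s


full_res = points_per_series(15, 365)      # raw 15 s samples kept for a year
downsampled = points_per_series(300, 365)  # 5-minute averages kept for a year
print(full_res, downsampled, full_res // downsampled)  # 2102400 105120 20
```

The 20x reduction is simply 300 s / 15 s per series, which is why keeping only a short window of raw data next to long-lived rollups pays off.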

Conclusion

Compared to the dark ages of 15 years ago, we have come a long way in terms of monitoring and insight into the status of servers and services. Nowadays, some call it observability rather than monitoring, but that’s a different topic.

The point is that today, there is a large set of tools that can give us a better picture of the status of our products. With a large number of pre-built plugins and metrics to collect, there is no excuse not to do detailed monitoring.

Our goal at Sysbee is to always keep our monitoring systems up to date with current standards and technologies. We also believe that there is no such thing as too many metrics. With this kind of setup, even metrics that are useless during regular operation today can become a crucial data point when debugging issues in the future.

We also like to keep all of this collected data available to end users, while enabling and encouraging them to load their own metrics into the same monitoring system.

Lastly, we hope we’ve brought monitoring and metrics collection closer to you, and that you’ll start using some of these tools in your day-to-day work. Of course, if you ever need help with it, our engineers are here for you.